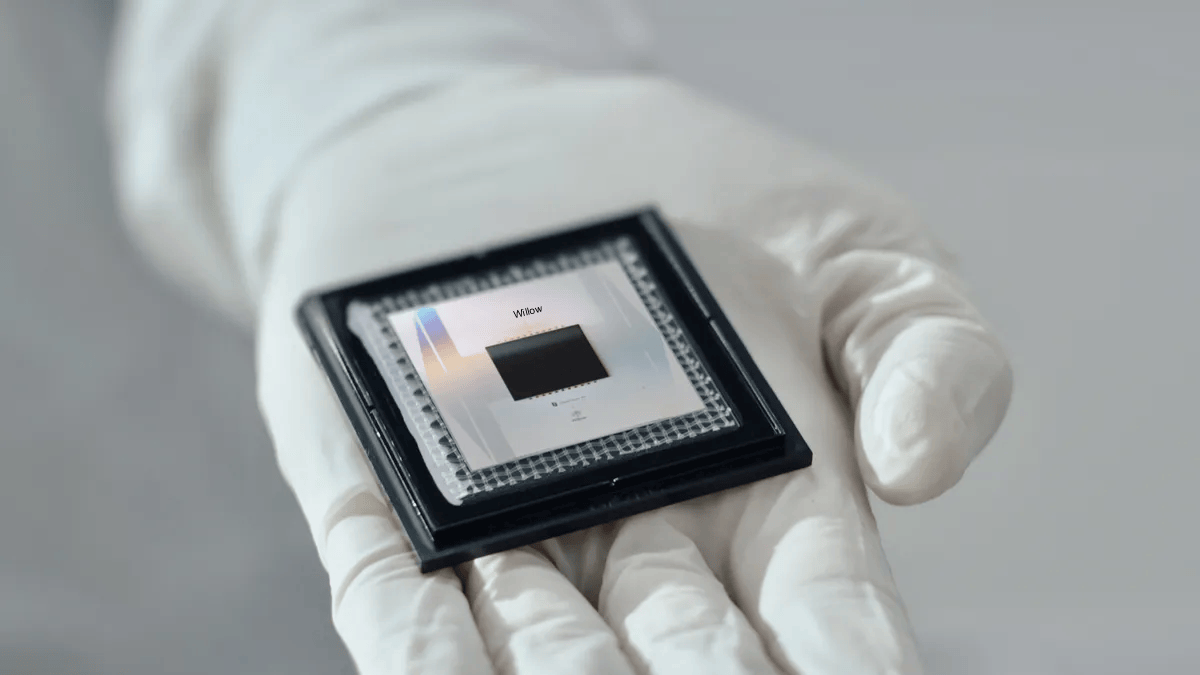

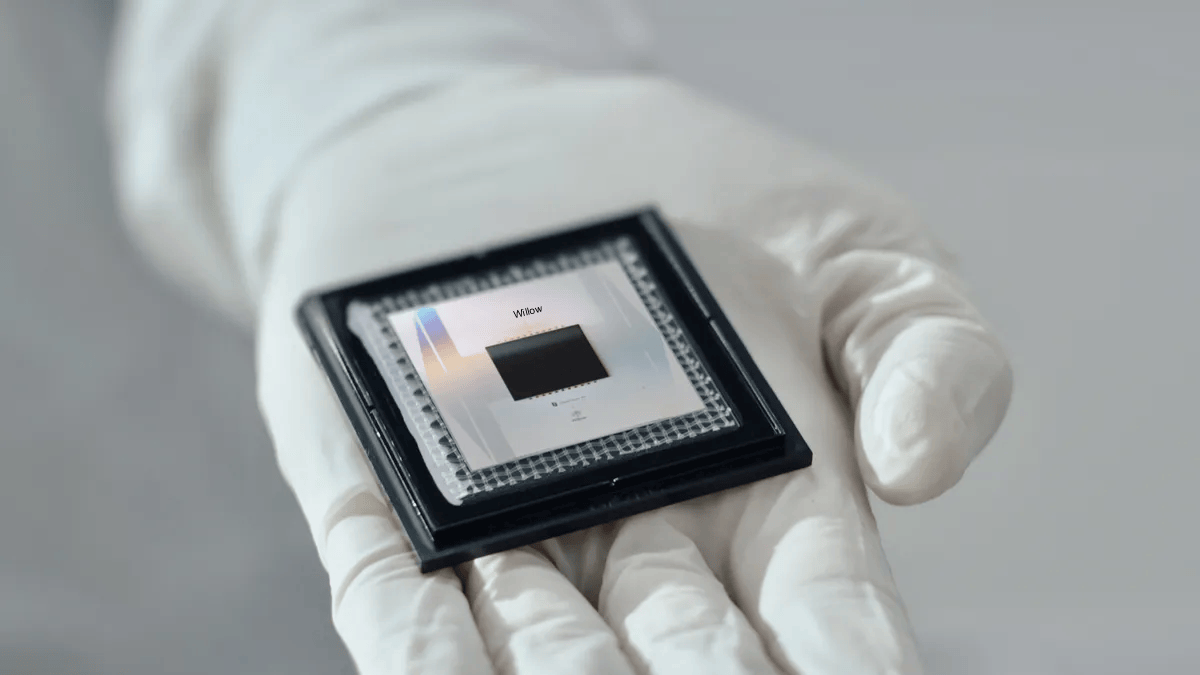

Google Quantum AI has unveiled the Willow superconducting quantum computing chip, marking a significant advancement in quantum technology. This innovative chip offers extended quantum coherence times, improved error correction, and computational power far beyond that of classical supercomputers. Willow represents a crucial milestone on the path toward scalable, error-corrected quantum systems with transformative potential across industries like pharmaceuticals and energy.

One of Willow’s key achievements is its ability to exponentially reduce errors as quantum processors scale up with more qubits, addressing a challenge in quantum error correction that has persisted for nearly three decades. Demonstrating its computational capabilities, Willow completed a standard benchmark computation in under five minutes, a task that would take the most powerful supercomputers 10 septillion years, a figure that dwarfs the age of the universe.

Willow surpasses the quantum error correction threshold, with logical qubits exhibiting longer lifetimes than physical qubits, marking a critical milestone for scalable quantum computing.

In a benchmark test, Willow demonstrated exponential computational power, completing tasks in minutes that would take classical supercomputers longer than the age of the universe.

Innovative hardware features, such as tunable qubits and advanced software calibration, enhance connectivity, reduce error rates, and ensure consistent performance across the chip.

Willow’s advancements have fantastic potential in industries like pharmaceuticals, energy storage, and fusion power, while paving the way for scalable, real-world quantum applications.

Pharmaceutical Innovation: Quantum computing could transform drug discovery by simulating molecular interactions with unparalleled accuracy. This capability has the potential to significantly reduce development timelines and costs, accelerating the creation of new treatments.

Energy Storage Optimization: In the field of battery development, quantum systems could identify and optimize materials for energy storage, leading to more efficient and sustainable solutions. This advancement could play a key role in addressing global energy challenges.

Fusion Energy Research: Quantum technology may enable precise modeling of complex plasma dynamics, a critical factor in advancing fusion energy research. By providing deeper insights into these processes, quantum computing could accelerate progress toward sustainable fusion power.

Quantum computers are inherently "noisy," meaning that, without error-correction technologies, one in every 1,000 qubits (the fundamental building blocks of a quantum computer) fails. It also means coherence times (how long the qubits can remain in a superposition so they can process calculations in parallel) remain short. By contrast, only one in every 1 billion billion bits fails in conventional computers.

Achieving this "below threshold" milestone means that errors in a quantum computer will reduce exponentially as you add more physical qubits. It charts a path for scaling up quantum machines in the future. The technology relies on logical qubits. A logical qubit is a qubit encoded using a collection of physical qubits in a lattice formation. All the physical qubits in a single logical qubit share the same data, meaning that if any of them fail, calculations continue because the information can still be found within the logical qubit.
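To make the idea of redundancy concrete, here is a minimal sketch using a classical repetition code with majority voting. This is only an analogy: quantum states cannot simply be copied, and this is not the code Willow runs. The per-copy error rate `p` is taken from the "one in 1,000" figure above, and the function name `logical_error_rate` is hypothetical.

```python
from math import comb

def logical_error_rate(p: float, n: int) -> float:
    """Probability that a majority vote over n independent copies is wrong.

    Each copy flips independently with probability p; the vote fails when
    more than half of the copies have flipped. n is assumed to be odd.
    """
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )

p = 1e-3  # per-copy error rate, matching the "one in 1,000" figure in the text
rates = {n: logical_error_rate(p, n) for n in (1, 3, 5, 7)}
for n, rate in rates.items():
    print(f"{n} copies: logical error rate ~ {rate:.3e}")
```

With a per-copy error rate this low, each pair of extra copies cuts the voted error rate by several orders of magnitude, which is the classical counterpart of errors shrinking exponentially as the code grows.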

The Google scientists built sufficiently reliable qubits for exponential error reduction by making several shifts. They improved calibration protocols, improved machine learning techniques to identify errors, and improved device fabrication methods. Most importantly, they improved coherence times while retaining the ability to tune physical qubits to get the best performance.

The Google researchers tested Willow against the random circuit sampling (RCS) benchmark, which is now a standard metric for assessing quantum computing chips. In these tests, Willow performed a computation in under five minutes that would have taken today's fastest supercomputers 10 septillion years. This is close to a quadrillion times longer than the age of the universe.
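The comparison with the age of the universe can be checked with a few lines of arithmetic. The sketch below assumes the short-scale definition of a septillion (10^24) and an age of the universe of about 13.8 billion years; neither value is stated in the text above.

```python
SEPTILLION = 10**24                  # short-scale septillion (assumption)
classical_years = 10 * SEPTILLION    # "10 septillion years" from the text
universe_age_years = 13.8e9          # assumed age of the universe in years

ratio = classical_years / universe_age_years
print(f"{ratio:.2e} times the age of the universe")
```

Under these assumptions the ratio comes out at roughly 7 × 10^14, which is a little under a quadrillion (10^15).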

The first edition of the Willow QPU can also achieve a coherence time of nearly 100 microseconds, which is five times better than that of Google's previous Sycamore chip.

Next, the team wants to create a "very, very good logical qubit" with an error rate of one in 1 million. To build this, they would need to stitch together 1,457 physical qubits, they said.
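The figure of 1,457 physical qubits lines up with a common way of counting qubits in a surface code patch. The sketch below assumes the rotated surface code layout, in which a patch of distance d uses d² data qubits plus d² − 1 measurement qubits. The text above does not say which code or layout the team has in mind, so treat this as an illustration; the function names are hypothetical.

```python
from math import isqrt
from typing import Optional

def physical_qubits(distance: int) -> int:
    """Qubits in one rotated-surface-code patch (assumed layout):
    d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * distance * distance - 1

def distance_for(qubits: int) -> Optional[int]:
    """Invert physical_qubits; return None if no integer distance matches."""
    d = isqrt((qubits + 1) // 2)
    return d if physical_qubits(d) == qubits else None

print(distance_for(1457))  # distance whose patch uses exactly 1,457 qubits
```

Under this assumption, 1,457 qubits corresponds to a distance-27 patch, since 2 × 27² − 1 = 1,457.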

Unlike large language models (LLMs), which are trained on vast amounts of text data to understand and generate human-like language, large quantitative models (LQMs) are specifically trained to handle numerical reasoning and complex calculations.

Specialized datasets: LQMs are trained on large datasets of mathematical problems, financial models, and statistical analyses. This focused training allows them to develop a deep understanding of quantitative concepts and relationships.

Mathematical formulations: Instead of learning language patterns, LQMs learn to recognize and apply mathematical formulas, statistical methods, and numerical algorithms.

Precision-focused learning: The training emphasizes accuracy in calculations and numerical outputs, prioritizing precision over the more flexible, context-based understanding seen in LLMs.

Domain-specific knowledge: LQMs often incorporate domain-specific knowledge in areas like finance, physics, or engineering, allowing them to tackle specialized quantitative problems.

Symbolic reasoning: Many LQMs are designed to perform symbolic manipulation, allowing them to work with algebraic expressions and equations in ways that traditional LLMs cannot.

Large quantitative models are built on two primary deep learning architectures: a variational auto-encoder (VAE) and a generative adversarial network (GAN). Both use extensive neural networks that are trained in pattern recognition. Together, they are known as a VAE-GAN learning architecture. Let’s look at the “VAE” and the “GAN” components individually.

VAEs can impute missing values based on learned latent representations, while GANs can generate realistic replacements for missing data points. Combined, these abilities let LQMs handle datasets with missing or incomplete information more effectively, a common challenge in real-world quantitative analysis.

In an LQM, the variational auto-encoder compresses complex data into a structured latent space, capturing essential patterns and relationships. This allows for efficient exploration and manipulation of data representations. The generative adversarial network (GAN) then refines this data by generating high-quality, realistic outputs from the latent space. Together, the VAE's efficient data compression and the GAN's ability to produce realistic data enable LQMs to excel in complex quantitative tasks, such as predictive modeling, scenario analysis, and risk assessment.
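The data flow described above (encoder, latent space, generator, discriminator) can be sketched in plain Python. This is a wiring diagram in code, not a trained model: the weights are random, the layer sizes are hypothetical, and every function name is an assumption made for illustration. A real VAE-GAN would use a deep learning framework and a training loop.

```python
import math
import random

random.seed(0)

def linear(n_in, n_out):
    """Return a random (untrained) weight matrix as a list of rows."""
    return [[random.gauss(0, 0.1) for _ in range(n_in)] for _ in range(n_out)]

def apply(weights, x):
    """Matrix-vector product: one output value per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

DATA_DIM, LATENT_DIM = 8, 2           # hypothetical sizes
enc_mu = linear(DATA_DIM, LATENT_DIM)
enc_logvar = linear(DATA_DIM, LATENT_DIM)
gen = linear(LATENT_DIM, DATA_DIM)    # acts as VAE decoder and GAN generator
disc = linear(DATA_DIM, 1)

def encode(x):
    """VAE encoder: map data to the mean and log-variance of a latent Gaussian."""
    return apply(enc_mu, x), apply(enc_logvar, x)

def sample_latent(mu, logvar):
    """Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * random.gauss(0, 1)
            for m, lv in zip(mu, logvar)]

def kl_divergence(mu, logvar):
    """KL term of the VAE loss: distance of the latent Gaussian from N(0, I)."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1 for m, lv in zip(mu, logvar))

def generate(z):
    """Generator: map a latent vector back to data space."""
    return apply(gen, z)

def discriminate(x):
    """GAN discriminator: score in (0, 1) for how 'real' x looks."""
    return 1 / (1 + math.exp(-apply(disc, x)[0]))

x = [random.random() for _ in range(DATA_DIM)]   # one toy data point
mu, logvar = encode(x)
z = sample_latent(mu, logvar)
x_hat = generate(z)
score = discriminate(x_hat)
kl = kl_divergence(mu, logvar)
print(len(z), len(x_hat), round(score, 3), round(kl, 6))
```

In training, the encoder and generator would be pushed to reconstruct `x` while keeping the KL term small, and the generator would additionally be pushed to raise the discriminator's score on its outputs.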

In autonomous vehicles, AI agents manage navigation, obstacle detection, and traffic rule compliance. They process data from sensors and cameras in real time to make driving decisions, ensuring safe and efficient travel. These AI systems continuously learn and adapt to various driving conditions, improving their reliability and performance over time. Additionally, AI agents in autonomous vehicles contribute to advancements in traffic management by communicating with other vehicles and infrastructure. This collective intelligence can optimize traffic flow and reduce congestion. The same applies to drones and other airborne vehicles.

Risk management: LQMs analyze market data to identify potential risks and simulate various economic scenarios. This helps banks and investment firms make more informed decisions about portfolio allocation and risk exposure.

Algorithmic trading: LQMs process real-time market data to identify trading opportunities and execute trades at optimal times, potentially improving returns and reducing risks.

Fraud detection: By analyzing patterns in transaction data, LQMs can flag suspicious activities more accurately than traditional rule-based systems, helping financial institutions combat fraud more effectively.
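The scenario simulation mentioned under risk management can be illustrated with a plain Monte Carlo estimate of value at risk (VaR). This is a stand-in technique, not an LQM: returns are drawn from a normal distribution, and the mean, standard deviation, and function names are hypothetical values chosen for the example.

```python
import random

def simulate_portfolio_returns(mean, stdev, n_scenarios, seed=0):
    """Draw one-period portfolio returns from a normal distribution (toy assumption)."""
    rng = random.Random(seed)
    return [rng.gauss(mean, stdev) for _ in range(n_scenarios)]

def value_at_risk(returns, confidence=0.95):
    """Loss threshold taken from the sorted scenarios at the (1 - confidence) quantile."""
    ordered = sorted(returns)                       # worst returns first
    index = int((1 - confidence) * len(ordered))
    return -ordered[index]                          # report the loss as a positive number

returns = simulate_portfolio_returns(mean=0.0005, stdev=0.02, n_scenarios=10_000)
var_95 = value_at_risk(returns, 0.95)
var_99 = value_at_risk(returns, 0.99)
print(f"95% VaR: {var_95:.4f}, 99% VaR: {var_99:.4f}")
```

A higher confidence level looks further into the tail of the simulated scenarios, so the 99% figure is at least as large as the 95% figure.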

Drug discovery: LQMs analyze molecular structures and biological data to predict potential drug candidates, potentially accelerating the drug discovery process and reducing costs.

Patient outcome prediction: By processing patient data, treatment histories, and genetic information, LQMs can predict patient outcomes and suggest personalized treatment plans ... and so on ...